Random Manipulation of a Random SmugMug Image

This is a process that runs daily and applies a random number of transformations in a random order with random settings to a randomly selected image from my SmugMug site. You can see a list of the results that are currently available. I started to try to do the math as to the number of possible outcomes and…it’s beyond me. A lowball, simplified bit of math got me to north of 500,000 quintillion different possible results as of the initial rollout, and that doesn’t factor in a number of things that would increase the number of possible outcomes. That’s what I wanted: wayyyyyy more than anything I could power through the “all.”

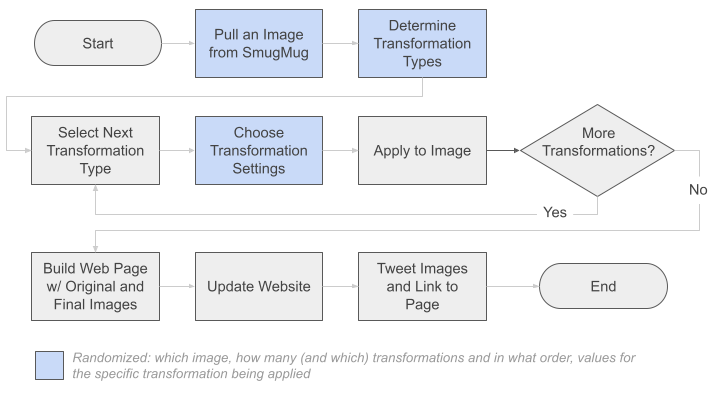

High-level, this is what happens (hurray for simplified flowcharts!):

Let’s pretend I sketched the above out before I wrote the first line of code and then just went step by step through building out the process. It wouldn’t be an unreasonable leap, actually. As I reflect on it now, I pretty much did have this basic sequence of steps in my head, and I did build out the code almost exactly from start to finish in sequence. Wow! Accident, or just a failure to get creative along the way? Who can say?! Read on for details!

In Video Form

I entered the project in the “Golden Punchcard” at Superweek in Hungary early in 2022. It wasn’t a contender, but it gave me an excuse to create a brief presentation to describe the project and the results. I recorded a shortened version (8.5 minutes) of that presentation afterwards, which you can watch below if you’d prefer that to reading about the details.

The Motivation

This project doesn’t qualify as generative art (or generative “aRt,” specifically, but if you’re interested in that sort of thing, check out Danielle Navarro’s amazing work or Claus O. Wilke’s work—including his NFTs!—or Koen Derks’s aRtsy package that is specifically ggplot-oriented).

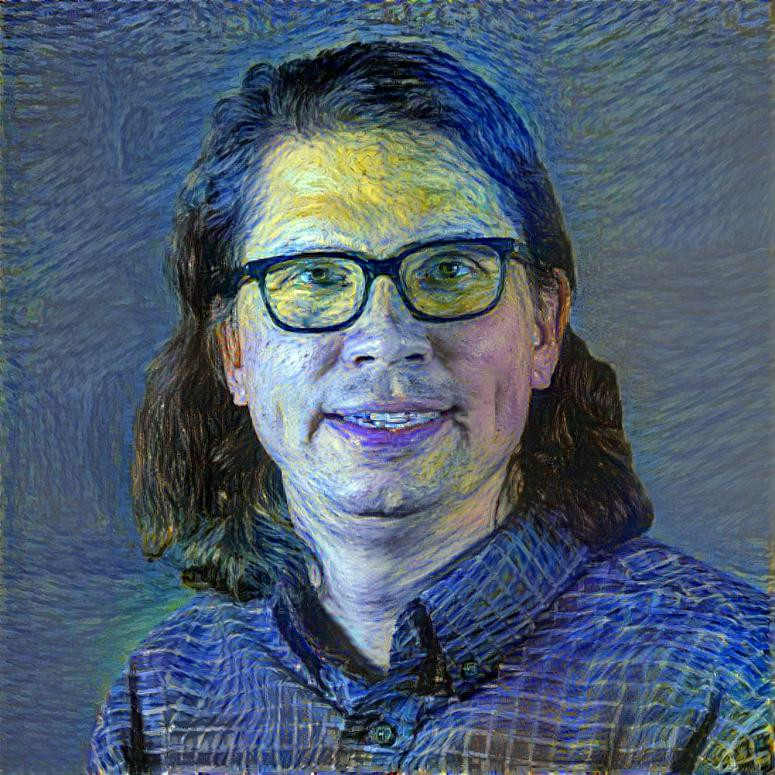

Rather, it was an opportunity for me to combine a couple of my hobbies—nature photography and R—into a single project and learn along the way! When it comes to doing programmatic (machine?) manipulation of photos, this is nothing nearly as involved as app.wombo.art or the neural networks employed by Deep Dream Generator, but, hey, this is me combined with Starry Night in an AI’s dream:

Cool? Disturbing? Who’s to Say?

The learning that came along with the effort was:

- Cloud-based automation: I initially thought this would be Docker and the Google Cloud Platform, but, ultimately, it wound up just being GitHub Actions, which turned out to be cheaper and, arguably, simpler. I’ll take it!

- Using APIs without a platform-specific package: I’ve known that httr is the go-to package for working with REST APIs, but I had never used it directly from authentication through actual GETs. Do I fully “GET” it now? Hard to say. I often feel like I’m living a few years in the past on that front.

- Image manipulation: Raster-based images are, at their core, just matrices with each pixel being represented by a value in the matrix. It turns out that it’s fun to think about exactly what the underlying matrix manipulation is that adds different effects to an image. That made for multiple interesting (to us) conversations with my son. Although he does stuff like this, so maybe he was just humoring me.

- Static site generation: This wound up being Hugo along with Netlify (with GitHub along the way). This all clicked for a magical 7.5 minutes, but only time will tell whether I’m able to make it stick.

Somewhere in all of that, I got to work with GitHub Secrets to securely store API tokens for SmugMug and Twitter!

Below, I’ve broken down the different components of the work, linking to specific resources for additional details. But, the full set of code really comes down to:

- A folder in the GitHub repository that serves this site (master.R is really the key file; it sources all the other files)

- The GitHub Action workflow YAML that is also part of the repository that serves this site

Let’s dig in a little deeper to explain the what’s what for all of this. Read on if you’re interested.

Pulling a Random Image from SmugMug

As of this writing, there is not yet a package built specifically for connecting to SmugMug, so I worked through their pretty comprehensive API documentation and then used the httr package to actually query the API.

Although I was only pulling public images (from my personal SmugMug site), using the API still requires creating and using an API token (specifically, a key and a secret). While doing the initial development, I simply put these in my .Renviron file as SMUGMUG_KEY and SMUGMUG_SECRET and then accessed them using Sys.getenv(). When I shifted to running the script via a GitHub Actions workflow, I simply created GitHub Secrets with the exact same names. And…it worked! I was expecting that I was going to need to update the R script to actually access those values differently when they were set as GitHub Secrets, but, nope! As the workflow ran and established an environment, those values were there and were accessible with the exact same code.

There is also an options() setting for blogdown and an install_hugo() command that I probably should be able to remove, but I hit my “if it ain’t broke…” threshold, so they’re staying for now. These are just a couple of lines in master.R and they cause no harm whatsoever when running locally. Overall, I was pretty tickled that this is how things worked out.
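As a rough sketch of the environment-variable pattern described above: the variable names SMUGMUG_KEY and SMUGMUG_SECRET come from the setup itself, while the helper name get_smugmug_credentials() and the fail-fast check are hypothetical and not taken from the actual scripts.

```r
# Hypothetical helper: read the SmugMug credentials from environment variables.
# Locally these would come from .Renviron; in a CI job they would need to be
# present in the job's environment under the same names.
get_smugmug_credentials <- function() {
  key <- Sys.getenv("SMUGMUG_KEY")
  secret <- Sys.getenv("SMUGMUG_SECRET")

  # Sys.getenv() returns "" for unset variables, so fail early and loudly.
  if (!nzchar(key) || !nzchar(secret)) {
    stop("SMUGMUG_KEY and SMUGMUG_SECRET must both be set", call. = FALSE)
  }

  list(key = key, secret = secret)
}
```

Because the code only ever looks at environment variables, it does not care whether the values came from .Renviron or from the workflow, which is presumably why nothing had to change between the two.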

What I wanted from SmugMug was pretty simple: a data frame that lists every image on my site. There is no, “Give me all the images” direct API call, so it took a two-step process:

- Pull all of the albums on the site

- Go through each album and pull each image in the album

I accomplished this with a little bit of recursion. The code is pretty straightforward and contained in the init_get_list_of_images.R script.
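The general shape of that two-step, recursive pull can be sketched as follows. This is not the code in init_get_list_of_images.R: the function names, the items/next_page/images_path/key fields, and the fake in-memory "API" are all assumptions made for illustration. A real fetch_page would wrap an httr::GET() call and map the SmugMug response onto this shape.

```r
# Recursively follow "next page" links, collecting every item along the way.
# `fetch_page` takes a path and returns a list with `items` (a list of records)
# and `next_page` (a path, or NULL on the last page).
collect_pages <- function(path, fetch_page) {
  page <- fetch_page(path)
  if (is.null(page$next_page)) {
    return(page$items)
  }
  c(page$items, collect_pages(page$next_page, fetch_page))
}

# Step 1: pull all albums. Step 2: pull every image in each album.
list_all_images <- function(albums_path, fetch_page) {
  albums <- collect_pages(albums_path, fetch_page)
  rows <- lapply(albums, function(album) {
    images <- collect_pages(album$images_path, fetch_page)
    data.frame(
      album = rep(album$name, length(images)),
      image_key = vapply(images, function(img) img$key, character(1)),
      stringsAsFactors = FALSE
    )
  })
  do.call(rbind, rows)
}

# A fake, in-memory stand-in for the API so the sketch runs without a network.
fake_pages <- list(
  "/albums?page=1" = list(
    items = list(list(name = "Birds", images_path = "/birds")),
    next_page = "/albums?page=2"
  ),
  "/albums?page=2" = list(
    items = list(list(name = "Trees", images_path = "/trees")),
    next_page = NULL
  ),
  "/birds" = list(items = list(list(key = "b1"), list(key = "b2")), next_page = NULL),
  "/trees" = list(items = list(list(key = "t1")), next_page = NULL)
)
fetch_fake <- function(path) fake_pages[[path]]

all_images <- list_all_images("/albums?page=1", fetch_fake)
```

Passing the fetcher in as an argument keeps the recursion separate from the HTTP details, which also makes it easy to try out without hitting the real API.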

The result was a data frame with one row for each image on the site. So, then it was just a matter of picking a row at random from that data frame using sample() (soooo much use of sample() in this project!) and then tracking down the actual URL for that image and downloading and saving it. I wound up capping the max width of the images to work with at 4,096 pixels just for file size and consistency reasons (since I record the exact transformations performed, it would be reasonably easy to recreate the same effect with a larger file if I wanted to).

This was actually a little messier than I expected, so it got its own script called init_get_random_image.R to perform that work.
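The "pick a row at random" step is the simple part. A minimal sketch, assuming a data frame with one row per image (the column names and values here are made up, and this is not the contents of init_get_random_image.R):

```r
# Stand-in for the real data frame of images; columns are hypothetical.
images <- data.frame(
  image_key = c("a1", "b2", "c3", "d4"),
  album = c("Birds", "Birds", "Trees", "Trees"),
  stringsAsFactors = FALSE
)

# Pick exactly one row at random. sample.int() avoids the surprise that
# sample(x, 1) treats a single number x as the range 1:x.
pick_random_image <- function(images) {
  images[sample.int(nrow(images), 1), , drop = FALSE]
}

chosen <- pick_random_image(images)
```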

That’s the work to actually get the base image. Which is the starting point.

Creating and Applying a Random Set of Transformations

I set this up to be reasonably extensible, in that it is modular with one script file for each possible transformation. Most of the transformations were ones available in Jeroen Ooms’s magick package, which is a wrapper for ImageMagick STL. But, I also have a soft spot for Hiroyuki Tsuda’s sketcher package, as that was the first package I used to do any image manipulation, so I included sketch as one of the transformations, too.

The possible transformations that I’ve included so far are (each is in its own .R file):

- [magick] Modulate—this adjusts the hue, saturation, and brightness of the image. The image starts with each of these three values as “100,” and then the script randomly selects values between 50 and 150 to apply to the transformation

- [magick] Median—this is an interesting one. The only input is a “radius” value, for which I use a random value between 15 and 50. Then, for each pixel in the image, the transformation looks at all of the pixels within that radius of the pixel and changes that pixel’s color to be the median color of all of those pixels. This winds up mostly blurring the image…except not quite. If there is a very hard, high contrast edge, that tends to stick around (it’s median and not mean!). This is one of the more time-consuming transformations, which makes sense.

- [magick] Colorize—this takes a color (which I randomly set by choosing random R, G, and B values) and an opacity and overlays that on the image. It, essentially, tints the image with a random color and to a random degree.

- [magick] Quantize—this reduces the image to a limited number of total colors, which I’ve set to be somewhere between 4 and 16. In some cases, earlier transformations will have already converted the image to be grayscale and this has no actual effect. In other cases, it does!

- [sketcher] Sketch—this is another somewhat process-intensive operation that makes a “sketch” of the image. The settings for it are the overall style (1 or 2), the lineweight (1 to 6), the contrast level (10 to 60), the amount of shadow (a real number between 0 and 1), and the gain (a real number between 0 and 1).
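To see why the median transformation keeps hard edges while a mean would smear them, here is a toy, one-dimensional illustration in base R. It is only meant to show the idea; it is not how ImageMagick implements its median filter, and the rolling() helper is made up for this example.

```r
# Apply a summary function `f` to a sliding window of +/- `radius` around each
# position (windows are simply truncated at the ends of the vector).
rolling <- function(x, radius, f) {
  vapply(seq_along(x), function(i) {
    window <- x[max(1, i - radius):min(length(x), i + radius)]
    f(window)
  }, numeric(1))
}

# One row of "pixels" with a hard, high-contrast edge in the middle.
edge <- c(0, 0, 0, 0, 10, 10, 10, 10)

median_filtered <- rolling(edge, radius = 1, f = median)
mean_filtered <- rolling(edge, radius = 1, f = mean)

# The median leaves the hard edge exactly where it was (same values as `edge`),
# while the mean creates in-between values on either side of it.
```

With a larger radius the median still wipes out small details (anything narrower than half the window), which is where the "mostly blurring" effect comes from.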

The scripts for each of the above do a few things:

- Select random values to be used for the settings

- Apply the transformation to the current version of the image and then update that as the current version

- Add to a string that records the specific settings that were used
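Those three steps might look something like this for the modulate transformation. This is a sketch, not the actual script: the function names, the state list holding the image and the log string, and the choice of whole-number settings are assumptions. magick::image_modulate() and its brightness, saturation, and hue arguments are real.

```r
# Step 1: select random values for the settings (each between 50 and 150,
# where 100 means "unchanged"). Whole numbers are an assumption.
random_modulate_settings <- function() {
  list(
    brightness = sample(50:150, 1),
    saturation = sample(50:150, 1),
    hue = sample(50:150, 1)
  )
}

# Steps 2 and 3: apply the transformation to the current image and record
# what was done. `state` is a hypothetical list with `image` (a magick image)
# and `log` (a character string).
apply_modulate <- function(state) {
  s <- random_modulate_settings()
  state$image <- magick::image_modulate(
    state$image,
    brightness = s$brightness,
    saturation = s$saturation,
    hue = s$hue
  )
  state$log <- paste0(
    state$log,
    sprintf("modulate(brightness = %d, saturation = %d, hue = %d); ",
            s$brightness, s$saturation, s$hue)
  )
  state
}
```

Usage would be along the lines of state <- apply_modulate(list(image = magick::image_read("photo.jpg"), log = "")), with photo.jpg standing in for the downloaded image.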

The question remains: what transformations are applied and in what order? This is simply a case where there is a tibble with the paths to each of these transformation scripts in the master.R script. The script:

- Shuffles the order of the rows in the tibble.

- Selects a random number between 1 and the number of rows in that tibble.

- Pulls that number of rows from the tibble.

- Runs the scripts (the paths) that were pulled.
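The four steps above can be sketched in a few lines. The file names are hypothetical, and a plain data frame stands in for the tibble so the example has no package dependencies; the real logic lives in master.R.

```r
# One row per available transformation script (file names are made up).
transformations <- data.frame(
  path = c("modulate.R", "median.R", "colorize.R", "quantize.R", "sketch.R"),
  stringsAsFactors = FALSE
)

pick_transformations <- function(transformations) {
  # Shuffle the order of the rows.
  shuffled <- transformations[sample.int(nrow(transformations)), , drop = FALSE]
  # Select a random number between 1 and the number of rows.
  n <- sample.int(nrow(shuffled), 1)
  # Pull that number of rows.
  shuffled[seq_len(n), , drop = FALSE]
}

# Run the scripts that were pulled, in their shuffled order.
run_transformations <- function(transformations) {
  chosen <- pick_transformations(transformations)
  for (p in chosen$path) {
    source(p)
  }
  invisible(chosen)
}

chosen <- pick_transformations(transformations)
```

Because each row is drawn at most once, no transformation repeats within a single run; adding a new transformation is just one more row in the table.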

I think it’s actually reasonably elegant design-wise (if I do say so myself). All that I need to do if I want to add an additional transformation to the mix is to create a new file for that transformation and add the path to that file to the original tibble.

Creating a Web Page with the Before/After

This is a pretty vanilla Hugo site, although it’s built and managed with blogdown, so the main references I used were the blogdown site and the Hugo site.

The main things that took me a while to figure out were:

- I just needed to make a dedicated folder under the ‘content’ folder and put all of the scripts and details there. This is a sibling folder of the default post folder, which is where straight-up posts (like the one you’re reading now) get built.

- I needed to create an R script that would actually build the .Rmd file that would display the before and after images and the transformations applied. That script is here, but this short video from CradleToGraveR was really helpful in getting the details right on that.

- When building that .Rmd file, I included a couple of handy things in the YAML header for when I built my list template (see the next item): a type parameter that simply populated with “image-randomization,” and then img_original and img_transformed parameters that contained the relative paths to the original and final/transformed images.

- I had to read/watch the Hugo documentation on list templates a few times to fully grok them, but this allowed me to make the page(s) of thumbnails showing before and after images. The source for that is in the /layouts/image-randomize folder, and the path and the filename for that are key. There is logic in that template that says to just use the files that are of a “type” (from the YAML header) of “image-randomization” and then use the img_original and img_transformed values to actually create the thumbnails. This…is pretty cool! (There is some inefficiency with the images themselves, as it currently just changes the dimensions of the images, but it is loading the full images, so the page weight is heavier than it needs to be; that’s a fix for another day.)
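For a sense of what "an R script that builds the .Rmd file" involves, here is a minimal sketch. The type, img_original, and img_transformed header fields are the ones described above; the function name, the title field, the body layout, and the example paths are assumptions, and the real script does more than this.

```r
# Hypothetical helper: write a small .Rmd file whose YAML header carries the
# fields that the Hugo list template looks for.
write_result_rmd <- function(file, title, img_original, img_transformed) {
  lines <- c(
    "---",
    sprintf('title: "%s"', title),
    'type: "image-randomization"',
    sprintf('img_original: "%s"', img_original),
    sprintf('img_transformed: "%s"', img_transformed),
    "---",
    "",
    sprintf("![Original image](%s)", img_original),
    "",
    sprintf("![Transformed image](%s)", img_transformed)
  )
  writeLines(lines, file)
  invisible(file)
}

# Example call, writing to a temporary file with made-up image paths.
rmd_file <- write_result_rmd(
  file = tempfile(fileext = ".Rmd"),
  title = "Example result",
  img_original = "images/example-original.jpg",
  img_transformed = "images/example-transformed.jpg"
)
```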

Tweeting the Result

The wrinkle with tweeting the result is that the tweet shouldn’t occur until after the web page with the results is published. That means the repo has to be committed and pushed back into GitHub (via GitHub Actions) so that Netlify is triggered to actually rebuild and publish the updated site. This happens pretty quickly: a few seconds for the GitHub commit and push, and then less than 30 seconds for Netlify to republish the site.

I could simply have a process whereby a daily tweet has a broken link for less than a minute. While I’ve certainly cut a few corners here and there on this whole effort, having a design where I’d be tweeting a broken link–even if only temporarily so–was going to be too much for me to stomach.

So, instead:

- A couple of lines of code at the very end of the script that builds the .Rmd file for the web page create a list with three items: 1) the ultimate URL for the new page, 2) the path to the original image, and 3) the path to the transformed image. The script then writes that to a file using saveRDS.

- The GitHub Action workflow YAML sleeps for 5 minutes (which I may shorten), loads up the various keys and secrets (stored as GitHub secrets in the repo) needed to access the Twitter API, and then calls a script that reads the RDS file and tweets the result.
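The hand-off between the two scripts can be sketched with saveRDS() and readRDS(). The list element names, the example URL and paths, and the file name are placeholders rather than the values the real scripts use.

```r
# At the end of the page-building script: bundle up what the tweet will need.
tweet_info <- list(
  url = "https://example.com/image-randomization/example-result/",
  img_original = "images/example-original.jpg",
  img_transformed = "images/example-transformed.jpg"
)
rds_path <- file.path(tempdir(), "tweet_info.rds")
saveRDS(tweet_info, rds_path)

# Later, in the separate tweeting script (after the site has republished):
restored <- readRDS(rds_path)
```

An RDS file preserves the list exactly as it was, so the tweeting script needs no knowledge of how the page was built.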

The inaugural tweet of this process is here.

Automating All of This

The automation, as already noted, used GitHub Actions. This turned out to be a little bit easier than I expected, but it still took quite a bit of trial and error and Googling. The end result is simply having a YAML file posted in a .github/workflows folder of the repository used to build this website.

My primary source for figuring out how to get this working was a detailed post by Simon Couch. The main way I wound up diverging from Simon’s post is that, ultimately, I did not make the script into a package. I actually initially did…sort of…and it worked, but it wound up being unnecessarily clunky.

I suspect that, in many cases, the package route is the way to go. But, in my case, I was trying to run the script in a repo that actually generates and publishes content to a website (this website). It felt…weird…to try to nest a package inside that repo. I gave it a shot, but, ultimately, realized that I was really only using the DESCRIPTION file to get the requisite packages installed. That’s it. I didn’t seem to be getting any other benefit out of packagifying the work. And I’d introduced filename restrictions and an unnecessarily messy file hierarchy in the process!

Additional digging turned up a helpful post by Athanasia Monika Mowinckel that included details on how to install packages directly within the GitHub Actions workflow.

I still ran into a few wrinkles with, for instance, libcurl-dependent packages (including httr!) not installing until I had manually installed libcurl first (within the workflow). I had the same issue with libmagick. So, that section of the YAML now looks like the following. Ultimately, the install.packages() was really the only reason, I think, for setting the script up as a package…and that seemed like overkill (admitting that I have no control over the version of each package being installed with the approach below; we’ll assume backward compatibility of future CRAN versions).

    - name: Install libcurl (for curl/httr)
      run: |
        sudo apt-get install libcurl4-openssl-dev -y
    - name: Install libmagick (for the magick package)
      run: |
        sudo apt-get install libmagick++-dev
    - name: Install R packages
      run: |
        install.packages(c("dplyr", "purrr", "httr", "knitr", "jpeg", "magick", "sketcher", "scales", "blogdown"), Ncpus = 2)
      shell: Rscript {0}

My debugging process for the workflow was to change the cron schedule to be 0 * * * *, which had it run hourly. It would take ~20 minutes to run if it didn’t error out, so that then gave me a solid 20-30 minutes to debug, tweak, and commit and push updates. The workflow logs on GitHub were super helpful there: it was very easy to track down exactly where in the workflow the error occurred and what the error was, which was then generally readily Google-able.

The “20 minutes to run” initially surprised me, as the script itself runs in 1-3 minutes locally (depending on the transformations being applied). But, it totally makes sense: each time the job runs, it’s installing R and then installing the requisite packages (this is why I’m installing and loading simply dplyr and purrr rather than tidyverse), and then actually running the script. I suspect this is one of those things that would be more efficient if I was actually doing this through Google Cloud Platform with a containerized image. But that’s an exploration for another day.

There are definitely still some aspects of the YAML that I don’t fully understand. I tried to selectively remove things that I didn’t think were necessary for my purposes, but the overall syntax is still a bit beyond me.

Some Examples

A few examples that are at least in the general direction of interesting (but you can see everything that has been generated here):
